Intel Optane SSD: Scientific Applications

Since July, anyone interested has been able to test out an Intel Optane SSD free of charge (an article detailing the benefits and advantages of this new disk can be found in our blog).


There were quite a few interesting projects, including those in the field of science.

Below, we’ll be looking at a few cases where these disks were used for scientific computations and applications.

Distributed Search for Gravitational Waves

This case was co-authored by Aleksandr Vinogradoviy, Sotsium Private Pension Fund, Nizhniy Novgorod.

Our server with the Intel Optane SSD turned out to be perfect for running tasks for the Einstein@Home project, a distributed search for gravitational waves. The project is memory-intensive: if there isn't enough memory, or it isn't fast enough, work units drag on for a few days instead of 12 hours. On the server we tested, there were no such slowdowns, and the longest unit took 18 hours.

By the way, this year's Nobel Prize in Physics went to Barry Barish, Rainer Weiss, and Kip Thorne "for decisive contributions to the LIGO detector and the observation of gravitational waves".

Einstein@Home

Einstein@Home is one of the many distributed computing projects on the BOINC platform. It can be launched on almost any existing processor and enthusiasts run it on absolutely everything they have.

Einstein@Home processes data from the LIGO gravitational-wave detector. Unlike SETI@Home, which pioneered mass distributed computing in 1999, it needs a lot of memory: up to 1.5 GB per thread. In other words, you won't be looking for gravitational waves on your phone or home laptop.

The BOINC platform lets you automate computations:

- set the maximum processor workload for BOINC tasks and limit processor availability for others

- define the number of processor threads to use

- set when tasks are launched

- limit the volume of network traffic
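The settings listed above map onto BOINC's global preferences, which the client can also read from a global_prefs_override.xml file in its data directory. Below is a minimal sketch that writes such a file from Python. The tag names are BOINC preference names as we understand them; the rule that caps the CPU share so that each Einstein@Home task (up to 1.5 GB, per the figure above) fits in RAM is our own assumption, not part of the original test. Note that max_ncpus_pct is a percentage of cores rather than an exact thread count.

```python
import math
import xml.etree.ElementTree as ET


def boinc_prefs_xml(total_ram_gb, n_cpus, cpu_usage_limit_pct=100.0,
                    start_hour=0.0, end_hour=0.0,
                    daily_xfer_limit_mb=0.0, daily_xfer_period_days=0,
                    ram_per_task_gb=1.5):
    """Build a global_prefs_override.xml body for the BOINC client.

    Assumption: one task per CPU, each needing ram_per_task_gb, so the CPU
    share is capped at the number of tasks that fit in total_ram_gb
    (never below one CPU).
    """
    tasks_that_fit = math.floor(total_ram_gb / ram_per_task_gb)
    usable_cpus = max(1, min(n_cpus, tasks_that_fit))
    max_ncpus_pct = 100.0 * usable_cpus / n_cpus

    root = ET.Element("global_preferences")
    values = {
        "max_ncpus_pct": max_ncpus_pct,              # share of cores BOINC may use
        "cpu_usage_limit": cpu_usage_limit_pct,      # % of CPU time per core
        "start_hour": start_hour,                    # compute window; equal values = no limit
        "end_hour": end_hour,
        "daily_xfer_limit_mb": daily_xfer_limit_mb,  # network cap; 0 = no limit
        "daily_xfer_period_days": daily_xfer_period_days,
    }
    for tag, value in values.items():
        ET.SubElement(root, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")
```

For example, boinc_prefs_xml(total_ram_gb=16, n_cpus=20) limits BOINC to 50% of the cores, since only ten 1.5 GB tasks fit in 16 GB. After saving the output as global_prefs_override.xml, recent clients can be told to reload it with `boinccmd --read_global_prefs_override`.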

This is the perfect tool for testing a multi-core processor.

In one week, we completed over 300 Einstein@Home work units. For comparison, another machine took 24 days to barely finish four, and the rest failed.
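Converted to daily throughput, and treating "over 300" as exactly 300 so the result is a lower bound, the gap is roughly 42.9 units per day against about 0.17 (the other machine's hardware isn't specified, so this says nothing about which component made the difference):

$$
\frac{300 / 7\ \text{units/day}}{4 / 24\ \text{units/day}} = \frac{300 \cdot 24}{7 \cdot 4} = \frac{1800}{7} \approx 257
$$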

In 1999, I saw an article in “Computerra” about SETI@Home. I thought it was interesting and tried to launch it myself. No luck: the Pentium MMX 200 processor couldn’t handle it. I was able to connect in the spring of 2001 after getting one of the first Celerons.

I've been interested in science fiction, space, and the search for extraterrestrials practically since birth, or at least since 1983 (I think), when Channel 1 showed the film "Hangar 18". I learned about SETI at about the same time from an episode of Kapitsa's "Visible-Unbelievable". It included a short segment about CETI, where everything got its start, and an explanation of all the variables in the Drake equation. Later I found a book by Shklovsky and materials from the 1970 conference in Byurakan, where they said that "one hundred billion dollars for the search for intelligence isn't really all that much."

At least that's what they thought in 1970. In 1999 there was no SpaceX and no crew aboard the ISS yet; there was just Mir orbiting the planet and the Voyagers heading out of the solar system. Funding for even the most serious space programs was plummeting worldwide, but here we had something that was the absolute least serious: a few students wrote a screensaver that didn't just draw pipes or stars, but used data from the Arecibo telescope as the basis for its picture! Because of this screensaver we have all of today's cloud technology, blockchains, and other distributed mathematics. Even the idea of using video cards to perform calculations was first implemented on the BOINC platform.

It became almost immediately evident that the total (combined) power of enthusiasts’ weaker computers exceeded the best existing supercomputers. Scientists got involved, multiple distributed computing projects began to appear, and the BOINC platform was created for managing them. Since then, millions of machines have participated in the search for the Higgs Boson (LHC@Home), in capturing gravitational waves (Einstein@Home), and in creating a three-dimensional map of the galaxy (MilkyWay@Home).

But the most important project for me was SETI@Home—the least serious and most fruitless project, but still the very first where everything got started. The project is now having a hard time—people with powerful computers are leaving to mine cryptocurrency, the telescope in Arecibo almost closed a few years ago (scientific spending isn’t a particularly popular topic in America’s budget), and on September 21, the radio telescope was completely knocked out by hurricane Maria. But the project is still in the lead; it’s now sorting data about a very interesting system, KIC8462852, and the BOINC platform is still the world’s most powerful supercomputer.

Intel Optane SSD Read and Write Tests

Aleksandr tested the Intel Optane with fio at IO depths of 16 and 32 (only a few tests were run on the HDD, because they took much longer than on the SSD).

Each test ran a full-disk workload at a fixed queue depth, and all of the parameters fio reports were recorded.
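The report doesn't list the exact fio job options, so the sketch below assumes random I/O, the libaio engine, direct I/O, 4 KiB blocks, and a 10 GB test file; only the queue depths (16 and 32) and the three workload types come from the article. It builds a fio command line and extracts runtime and mean completion latency (clat) from fio's JSON output, which in fio 3.x reports clat in nanoseconds. Be careful: pointing --filename at a raw device for a write test destroys its contents.

```python
import json
import subprocess


def fio_command(filename, rw, iodepth, size="10G", bs="4k"):
    """Build a fio command for one test; every option except rw/iodepth is an assumption."""
    return [
        "fio",
        "--name=optane-test",
        f"--filename={filename}",
        f"--rw={rw}",            # randread, randwrite or randrw
        f"--iodepth={iodepth}",  # 16 or 32 in the article
        "--ioengine=libaio",
        "--direct=1",            # bypass the page cache
        f"--bs={bs}",
        f"--size={size}",
        "--output-format=json",
    ]


def summarize(fio_json):
    """Return {direction: (runtime_ms, mean_clat_ms)} for the first job in fio's JSON."""
    job = json.loads(fio_json)["jobs"][0]
    summary = {}
    for direction in ("read", "write"):
        stats = job[direction]
        if stats["io_bytes"] == 0:  # this direction wasn't exercised by the job
            continue
        summary[direction] = (stats["runtime"], stats["clat_ns"]["mean"] / 1e6)
    return summary


def run(filename, rw, iodepth):
    """Run one fio test and summarize it (requires fio to be installed)."""
    result = subprocess.run(fio_command(filename, rw, iodepth),
                            capture_output=True, text=True, check=True)
    return summarize(result.stdout)
```

Calling run("/mnt/optane/fio-test.bin", "randrw", 16), with a hypothetical path, yields separate read and write figures, matching the "R:/W:" pairs in the mixed-workload columns below.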

Three tests were performed in total. Their results are shown in the tables below:

Runtime, ms:

| Device | Read, iodepth=16 | Read, iodepth=32 | Write, iodepth=16 | Write, iodepth=32 | Read+Write, iodepth=16 | Read+Write, iodepth=32 |
|---|---|---|---|---|---|---|
| HDD | n/a | 1,850,557 | 4,577,666 | n/a | n/a | n/a |
| SSD | 299,688 | 481,744 | 259,161 | 512,864 | R: 378,001; W: 474,573 | R: 431,930; W: 498,782 |

Average completion latency (clat), ms:

| Device | Read, iodepth=16 | Read, iodepth=32 | Write, iodepth=16 | Write, iodepth=32 | Read+Write, iodepth=16 | Read+Write, iodepth=32 |
|---|---|---|---|---|---|---|
| HDD | n/a | 496.75 | 614.54 | n/a | n/a | n/a |
| SSD | 49.50 | 163.47 | 42.99 | 174.08 | R: 62.38; W: 78.37 | R: 146.62; W: 169.36 |

While the HDD struggled, averaging roughly half a second or more per request (496.75 to 614.54 ms), the SSD's average latency stayed below 0.2 seconds in every test.

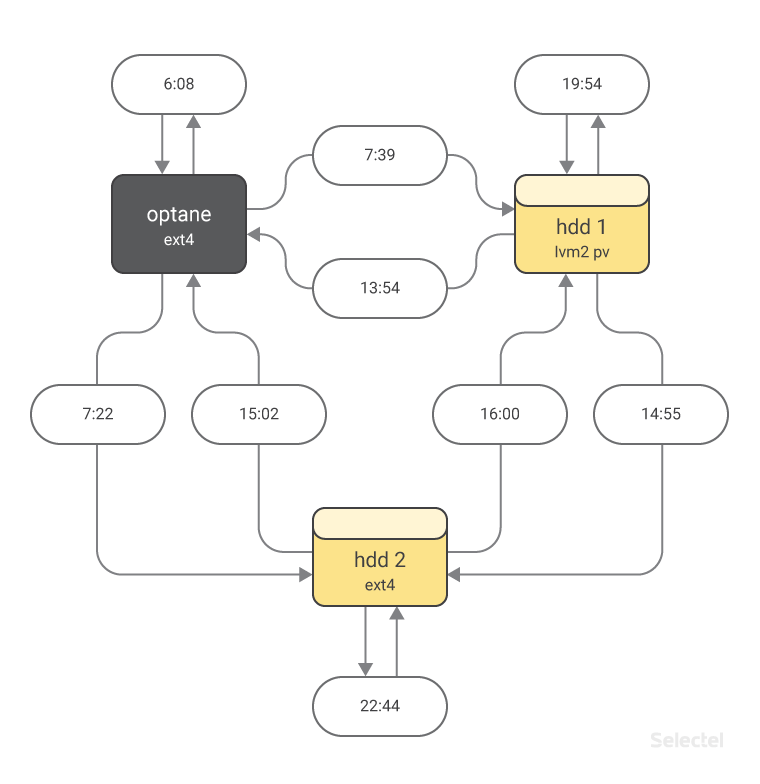

Then we decided to simply copy a 150 GB file from each device to every other device, and to itself. Here are the results (in minutes and seconds). This ended up being more of a test of the whole server, but what surprised me most was not how long copying to the SSD took, but how fast the regular disks were when nothing else was loading the system.
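To compare copy times like these across devices, it helps to convert them into average throughput. A minimal sketch, assuming decimal gigabytes (1 GB = 1000 MB) and times written as minutes:seconds:

```python
def copy_throughput_mb_s(elapsed: str, size_gb: float = 150.0) -> float:
    """Average throughput in MB/s for copying size_gb decimal GB in `elapsed` ("mm:ss")."""
    minutes, seconds = elapsed.split(":")
    total_seconds = int(minutes) * 60 + float(seconds)
    if total_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return size_gb * 1000 / total_seconds
```

For instance, a hypothetical copy time of "25:00" for the 150 GB file works out to 100 MB/s.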

Aleksandr’s detailed report on these tests (Russian).

Optimizing Algorithms in the Field of Computational Electrodynamics

This case was co-written by Vyacheslav Kizimenko, Research Department at Belarusian State University of Informatics and Radioelectronics.

Since its initial release, a fairly large number of reviews and professional evaluations of Intel Optane SSD performance have been published. Still, it’s always nicer to actually get your hands on something new and to use it for running your own tasks. Selectel graciously gave us this opportunity.

Systems of linear algebraic equations are used to model many different physical processes (such as the flow of viscous incompressible fluids in aerodynamics). When optimizing our algorithms, we try to reduce modeling time as much as possible. We do this by parallelizing the algorithms and running them on multiprocessor systems or on video cards that support CUDA and OpenCL.

When solving such systems, we have to store high-dimensional matrices on hard disks. Anyone who models physical processes knows that disk performance is a common bottleneck.

Such a matrix is often reused in later computations or when optimizing the devices being modeled. Put simply, after you have optimized all of your algorithms as far as they will go, it's a shame to lose time just saving data.

Our numerical algorithm is based on the method of moments, in which an object (such as a microstrip antenna) is broken down into many elementary segments.
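In the usual method-of-moments formulation, N segments (basis functions) lead to a dense N x N coefficient matrix, so storage grows with N squared. A small sketch of that arithmetic, assuming 8-byte doubles as the article describes; set bytes_per_entry=16 if a solver stores complex values (an assumption, not something stated in the source):

```python
import math

BYTES_PER_DOUBLE = 8  # coefficients stored as C doubles


def matrix_bytes(n_unknowns: int, bytes_per_entry: int = BYTES_PER_DOUBLE) -> int:
    """Size in bytes of a dense n x n coefficient matrix."""
    return n_unknowns * n_unknowns * bytes_per_entry


def max_unknowns(budget_bytes: int, bytes_per_entry: int = BYTES_PER_DOUBLE) -> int:
    """Largest n whose dense n x n matrix fits in budget_bytes."""
    return math.isqrt(budget_bytes // bytes_per_entry)
```

For example, a 110 MB block (taking 1 MB as 2**20 bytes) holds a dense matrix of at most 3,797 unknowns, which shows how quickly storage becomes the limiting factor as the segmentation gets finer.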

We measured the total time it took to read and write various sized matrix blocks.

In real tasks, we save matrices of equation coefficients to disk; these are essentially arrays of double values. To speed up testing, I generated such arrays, filled them with random doubles, and then measured the total time needed to write and read each array.
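The procedure described above (fill an array of doubles with random values, then time writing it out and reading it back) can be sketched in Python as follows. This is not the authors' code: the block sizes, the file location, and the use of fsync to make sure the data actually reaches the drive are our assumptions. An immediate read-back is likely to be served from the OS page cache, so a fair read measurement would need caches dropped between runs or direct I/O.

```python
import array
import os
import random
import tempfile
import time


def make_block(n_values: int, seed: int = 0) -> array.array:
    """Array of C doubles filled with random values (stand-in for matrix coefficients)."""
    rng = random.Random(seed)
    return array.array("d", (rng.random() for _ in range(n_values)))


def write_read_time(block: array.array, path: str) -> tuple[float, float]:
    """Return (write_seconds, read_seconds) for saving `block` to `path` and loading it back."""
    start = time.perf_counter()
    with open(path, "wb") as f:
        block.tofile(f)
        f.flush()
        os.fsync(f.fileno())  # force the data onto the device, not just the page cache
    write_s = time.perf_counter() - start

    restored = array.array("d")
    start = time.perf_counter()
    with open(path, "rb") as f:
        restored.fromfile(f, len(block))
    read_s = time.perf_counter() - start

    if restored != block:
        raise RuntimeError("data read back does not match data written")
    return write_s, read_s


def benchmark(sizes_mb, target_dir=None):
    """Return [(size_mb, write_ms, read_ms), ...]; target_dir should sit on the drive under test."""
    target_dir = target_dir or tempfile.gettempdir()
    results = []
    for mb in sizes_mb:
        block = make_block(mb * 1024 * 1024 // 8)  # 8 bytes per double
        path = os.path.join(target_dir, f"block_{mb}mb.bin")
        try:
            write_s, read_s = write_read_time(block, path)
        finally:
            if os.path.exists(path):
                os.remove(path)
        results.append((mb, write_s * 1000, read_s * 1000))
    return results
```

For example, benchmark([1, 2, 16, 110], "/mnt/optane"), where the mount point is hypothetical, returns per-size write and read times in milliseconds that can be compared drive by drive.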

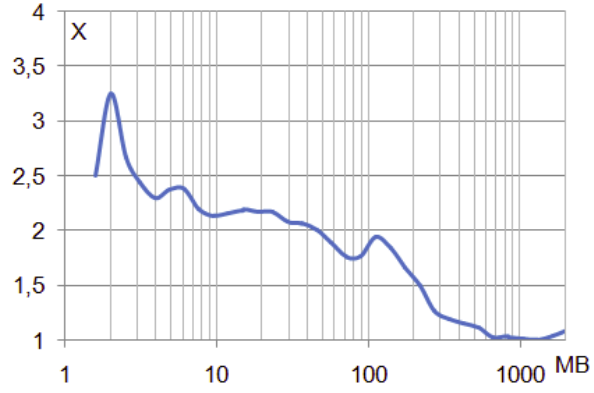

We compared the Intel Optane SSD to a standard SSD, measuring the difference in time for saving block matrices (for write tests, we compared the Optane to the Intel SC2BB48 SSD).

The graph shows how many times faster the Intel Optane completed this task, that is, the time the standard SSD took (in milliseconds) divided by the time the Intel Optane took.

Tests were performed on a server built with the Intel E5-2630v4 processor, Intel Optane SSD DC P4800X (375 GB), Intel SSD SC2BB48 (480 GB), and 64 GB RAM.

From the graph we can see that the Intel Optane SSD was nearly twice as fast as the regular SSD when saving matrix blocks of up to 110 MB, and at 2 MB it was as much as 3.25 times faster.

These results show that the new Intel Optane SSD is of interest not only to hosting customers but to any specialist who processes and stores large amounts of data, or who models and designs processes.

Instead of a Conclusion…

If you have any other interesting ideas of how to use the Optane, you’re welcome to test it out. The promotion is still active and everyone is invited to test drive the Intel Optane SSD for free.