Curious Cases in Working with Servers

Despite how new something is or how long it’s been working under a full workload, equipment sometimes starts to act a bit unpredictably. Servers are no exception.

Despite how new something is or how long it’s been working under a full workload, equipment sometimes starts to act a bit unpredictably. Servers are no exception.

Crashes and malfunctions happen, and oftentimes, what should just be simple troubleshooting turns into a time-consuming whodunit.

Below, we’ll be looking at a few interesting and curious examples of how servers have misbehaved, and what was done to get them back in order.

Identifying Errors

More times than not, issues are registered only after clients submit a ticket to tech support.

When our Dedicated Server clients contact us, we run a free diagnosis to ensure the problem isn’t software-related.

Software-related issues are usually solved by the clients themselves, but never the less, we always offer the assistance of our system administrators.

If it becomes evident that the problem is caused by the hardware (if the server doesn’t recognize a portion of RAM, for example), then we always have spare server platforms available.

For hardware-related issues, we transfer disks from malfunctioning servers to a backup server and after a few quick adjustments to the network equipment, redeploy the server. This way no data is lost and downtime is kept to under 20 minutes (starting the moment the relevant ticket is submitted).

Malfunctions and Troubleshooting Methods

Network Malfunctions on a Server

It’s always possible that after transferring a disk from a crashed server to a backup server, the network will not work. This usually happens with Linux-based operating systems, like Debian or Ubuntu.

The reason is that during the initial OS installation, the MAC address of the network card is written to a special file: /etc/udev/rules.d/70-persistent-net.rules.

When the operating system loads, this file maps the interface names to the MAC addresses. When you swap servers, the MAC address of the network interface no longer matches the file, and you end up without a network connection.

To solve this issue, the aforementioned file has to first be deleted and then the network service relaunched or server rebooted.

When the operating system can’t find the file, a similar file will be generated, mapping the interfaces with the MAC address of the new network card.

In this case, there’s no need to reconfigure IP addresses as the network will immediately work.

Wandering Errors and Hangs

We once had to diagnose a server that would randomly hang. We checked the BIOS and IPMI logs—empty, no errors. We ran a stress test, pushing all of the processor cores to 100% while monitoring the temperature; it hung with no chance of recovery after 30 minutes.

The processor was operating just fine, the temperature didn’t exceed acceptable values when under load, and all of the fans were in working order. It became clear that overheating wasn’t the issue.

Next we had to exclude the possibility of defective RAM modules, so we ran a memory test with Memtest86+. After 20 minutes, the server unsurprisingly hung and returned an error for one of the RAM modules.

We replaced the defective module with a new one, ran the test again, but were met by yet another fiasco: the server hung and returned an error for a different RAM module. We replaced it, ran another test, and again it hung and returned a memory error. A careful inspection of the RAM slots didn’t reveal any defects.

The only other possible culprit was the CPU. The RAM controller is located inside the processor, and that could be the cause of our problem.

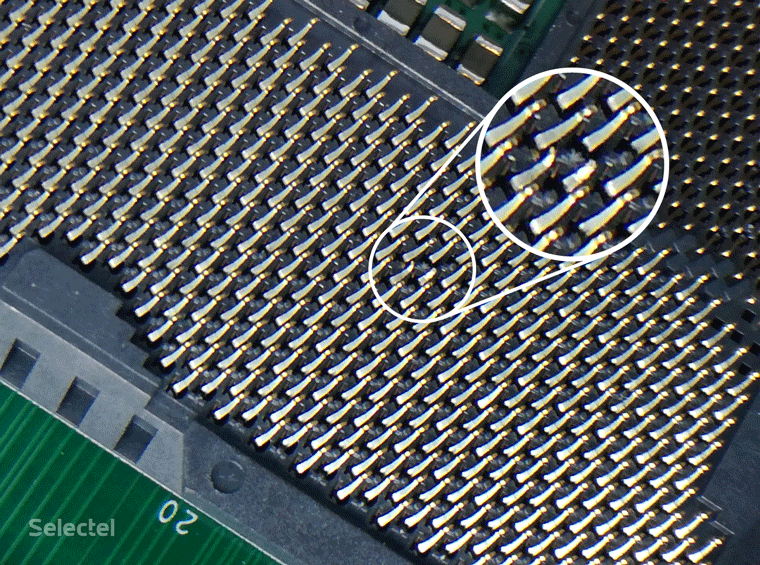

After removing the processor, what we found was catastrophic—the top part of one of the socket pins had broken; the tip was literally stuck to the contact area of the processor. As it turns out, when the server wasn’t under any load, everything worked more or less fine, but when the temperature of the processor started to rise, contact was lost, disrupting the RAM controller and causing the system to hang.

The problem was finally solved by replacing the motherboard; restoring a broken pin in a socket is, alas, beyond us and a task better suited for a service center.

False Hangs during OS Installations

Funny things start to happen when hardware manufacturers start changing architectures that aren’t backwards compatible with older technology.

We had a user complain that their server would hang when they tried to install Windows Server 2008 R2. After successfully launching the installation wizard, the server would stop responding to the mouse and keyboard in the KVM console. To localize the problem, we connected a physical mouse and keyboard to the server, but got the same problem: the wizard would launch but the server wouldn’t respond to input devices.

At that time, this was one of the first servers built on the Supermicro X11SL-F motherboards. There was an interesting parameter in the BIOS settings: Windows 7 install, which was set to Disable. Since Windows 7, 2008, and 2008 R2 are deployed from the same installer, we changed this to Enable and miraculously the mouse and keyboard finally started working. But this was only the beginning.

When it was time to choose a disk to install to, none of the disks were showing up; moreover, an error popped up, saying additional drivers had to be installed. The operating system was being installed from a USB flash drive, and a quick Internet search showed that this error occurs when the installation program can’t find the USB 3.0 controller drivers.

We read on Wikipedia that the issue could be resolved by disabling USB 3.0 support (the XHCI controller) in the BIOS. Boy were we surprised when we opened up the motherboard documentation: the developers had decided to completely do away with EHCI (Enhanced Host Controller Interface) controller support and replace it solely with XHCI (eXtensible Host Controller Interface) support. In other words, all of the USB ports on this motherboard were USB 3.0. So, if we had disabled the XHCI controller, then we would have disabled the input devices, making it impossible to use the server and consequently install the OS.

Since the server platform wasn’t equipped with a CD/DVD drive, the only solution was to integrate the drivers directly with the OS image. Only after we integrated the USB 3.0 controller drivers and recompile the image could we install Windows Server 2008 R2 on the server. This was added to our knowledge base to save our engineers from wasting their time on similar issues.

Surprise Feature of the Dell PowerVault

It’s also a hoot when clients bring us equipment for colocation that doesn’t behave as expected. That was the case with the Dell PowerVault disk array.

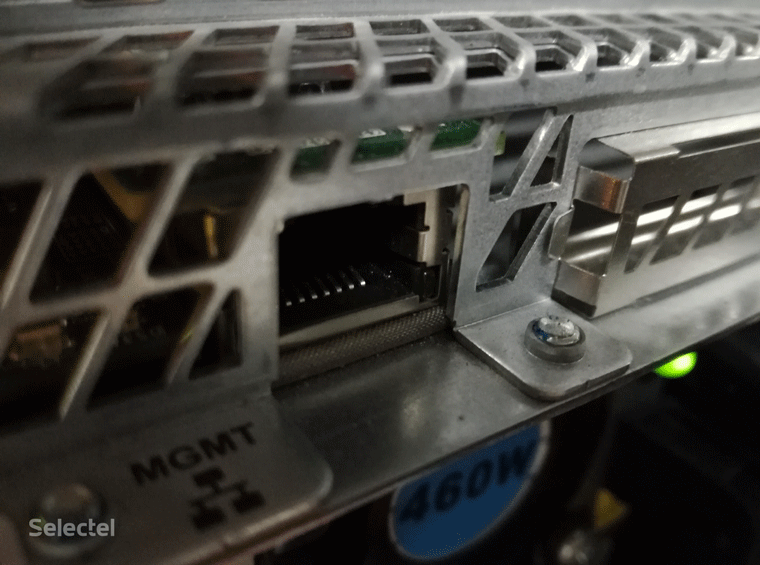

The device is a data storage system with two disk controllers and iSCSI network interfaces. These interfaces also have MGMT ports for remote management.

We offer additional services for colocation, including Additional port: 10 Mbps, which can be ordered if you need to connect remote server management tools. These tools go by different names:

- iLo by Hewlett-Packard

- iDrac by Dell

- IPMI by Supermicro

They all have roughly the same functions: server status monitoring and remote console access. They don’t require a high-speed channel; 10 Mbps is enough to work comfortably. This is what the client ordered. We laid the necessary copper cross connect and configured the port on our network equipment.

To limit speed, the port is simply configured as 10BASE-T and enabled with a maximum bandwidth of 10 Mbps. When everything was set and ready, we connected the array, but the client reported almost immediately that it wasn’t working.

After checking the status of the switch port, we found an unpleasant message: Physical link is down. This means there’s a problem with the physical connection between the switch and the client’s equipment it’s connected to.

A poorly crimped connector, broken plug, frayed wire in the cable—those are just some of the things that could cause a missing link. Our engineers naturally got out the crossover cable tester and checked the connection. All of the wires had ideal continuity and both ends were crimped perfectly. When we connected the cable to a laptop, we got the anticipated 10 Mbps connection. It became clear that the problem was with the client’s equipment.

Since we always try to help our clients solve their issues, we decided to figure out what exactly was interfering with the link. We carefully inspected the MGMT port socket: everything was in order.

On the manufacturer’s site, we look up the original operating instructions to find out if it could be software killing the port. It turns out that wasn’t possible; the port activates automatically. Despite the fact that this kind of equipment should always support Auto-MDI(X), or in other words properly determine what kind of cable is connected: typical or crossover, we crimped a crossover and connected it to the switch port for the sake of experimenting. We tried forcing the duplex setting on the switch port. No result; there was still no link and we were out of ideas.

Then one of our engineers made a suggestion that completely defied logic. He suggested that the equipment doesn’t support 10BASE-T and would only work with 100BASE-TX or maybe even 1000BASE-X. Usually any port, even on the cheapest equipment, is 10BASE-T compatible, and the suggestion was initially ruled out as nonsense, but with no other options, we decided to try reconnecting the port in 100BASE-TX.

Words cannot begin to describe our astonishment; the link was immediately picked up. It remains a mystery why exactly the MGMT port didn’t support 10BASE-T; this is extremely rare, but can happen.

The client was no less surprised than we were and very thankful that the problem was solved. We naturally left the 100BASE-TX port and limited the speed right in the integrated throttling mechanism.

Fan Blade Failure

Once a client came to us, asked us to unmount his server, and take it to the service area. The engineers left him alone with his equipment. One hour went by, then two, then three. The whole time the client just kept booting up his server and shutting it down, and we of course wanted to know what the problem was.

It turns out that two of the six cooling fans in the Hewlett-Packard server weren’t working. The server would turn on, return a cooling error, and then turn off. In this case, the server was host to a hypervisor running critical services. To get the service up and running again, the virtual machines had to be moved to another physical node.

This is how we decided to help the client. A server normally understands that a fan is working properly by counting the number of rotations. Naturally, the engineers at Hewlett-Packard did everything they could to prevent the original fan from being replaced by a similar part—irregular connectors, irregular pin assignments.

The original part cost around $100 and you couldn’t just go out and buy one; you had to order it from abroad. Thanks to the Internet, we found the schematics of the original pin layout and discovered that a single pin was responsible for counting the number of rotations per second.

Things then got technical. We took a couple of wires for prototyping (the odds of success were in our favor, some of engineers are Arduino enthusiasts) and just connected the pins from the neighboring working turbines to the connectors of the broken ones. The server booted up and the client was able to migrate his virtual machines and relaunch his services.

It goes without saying that all of this was performed with the client’s blessing, but never the less, this atypical approach let him reduce downtime to a minimum.

Where’d the Disks Go?

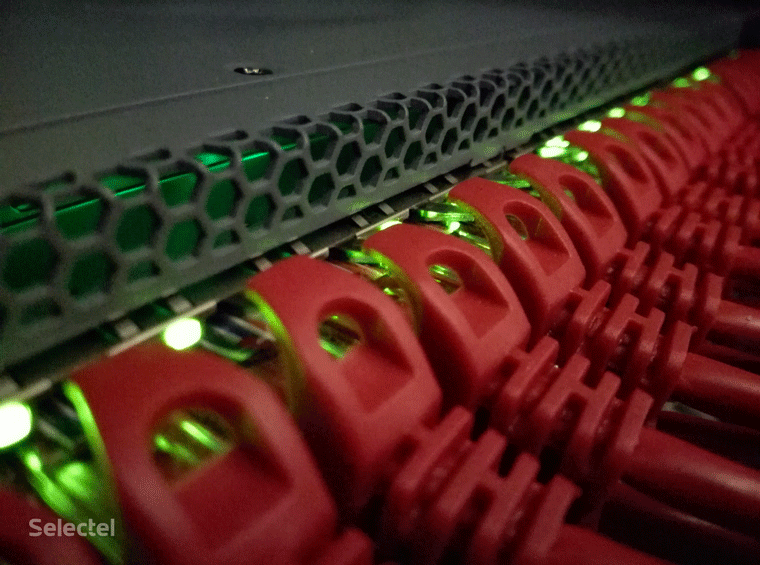

Sometimes the cause of the problem is so far out in left field that loads more time is spent finding it than fixing it. That’s how it was when one of our clients complained that disks would randomly drop and the server hang. The hardware platform was a Supermicro 847 chassis (form factor 4U) with baskets for 36 disks. Three identical Adaptec RAID controllers were installed in the server with 12 disks in each one. When the problem occurred, the server would stop seeing a random number of disks and hang. The server was taken out of production and handed over for diagnostics.

The first thing we were able to determine was that the disks would crash only on one controller. Here, the “dropped disks” would disappear from the native Adaptec management tool and they would only appear again after fully disconnecting and reconnecting the server to its power source. The first thing that came to mind was the controller’s software. All three controllers had different firmware, so it was decided to install the same version on all of them. After the changes were made, we pushed the server to the maximum workload; everything worked fine. We marked the issue as resolved and gave the server back to the client for production.

Two weeks later, we got a message about the same problem. This time we decided to replace the controller with a similar one. We made the changes, upgraded the firmware, connected it, and started testing. Same problem. Like clockwork, all of the disks failed on the new controller and the server hung two days later.

We installed the controller in a different slot, replaced the backplane and SATA cables from the controller to the backplane. A week of testing and again the disks would fail and server hang. Contacting Adaptec tech support didn’t yield any results; they tested all three controllers and didn’t find a single problem. We replaced the motherboard—practically fully rebuilt the platform. Anything that caused an iota of doubt was replaced with a new one. Yet again we were met with the same problem. It had to have been sorcery.

We were able to solve the problem completely by chance when we started checking each disk individually. Under a specific load, the heads on one of the disks would start to click and short the SATA port, but for some reason there were no indicators. The controller here would stop seeing that section of disks and would start only after reconnecting its power supply. That’s how a single broken disk was able to take down a full server platform.

Conclusion

This is just a small look at some of the interesting situations our engineers have handled. Nailing down some problems is far from easy, especially when the log doesn’t offer any insight. However, these sorts of issues push our engineers to really dive into the server equipment and find all sorts of ways to solve problems.

All of the stories we talked about today are true and happened here at Selectel.

What sorts of issues have you run into? Share your stories with us in the comments below.